Elementary discrete mathematics

Table of contents

- Front page

- Summer I 2020 syllabus

- Problems

- 0. What is discrete mathematics?

- PART 1: SETS AND LOGIC

- Part 1 videos

- 1. Sets

- 2. Propositional logic

- 3. Predicate logic

- PART 2: PROOF AND RECURSION

- Part 2 videos

- 4. Proofs

- 5. Recursion and integer representation

- 6. Sequences and sums

- 7. Asymptotics

- 8. Proof by induction

- INTERLUDE

- PART 3: COMBINATORICS

- 9. Introduction to combinatorics

- 10. Ordered samples

- 11. Unordered samples

- 12. Multinomial coefficients

- 13. Probability

- PART 4: RELATIONS

- 14. Boolean matrices

- 15. Relations

- 16. Closure and composition

- 17. Equivalence relations

- 18. Partial orders

- EPILOGUE

0. What is discrete mathematics?

0.1 It’s math you do quietly, right?

The phrase “discrete mathematics” is often used nebulously to refer to a certain branch of mathematics that, from the college point of view, is “not calculus” or perhaps is applicable to computer science. In this section, we will try to understand what it means for a topic to fall under the umbrella of discrete mathematics.

0.2 But really, what is it?

Here’s a question: What is the next number after ? Your answer depends on “where you live,” so to speak. If you live in the rational numbers (numbers that can be expressed as fractions, like ) or the real numbers (including numbers that can’t be written as fractions, like ), you can’t answer the question. If you give be a real or a rational number that’s a little more than , say , I can find something even smaller; say, .

If you live in the set of natural numbers, which is the set of numbers that are useful for counting things in the world, the question does have an answer: . The natural numbers do not suffer from the crowding issue that the rationals and the real do; all of their elements are sufficiently “far apart” in this way. There is , and then , and nothing in between. In this sense they are discrete. In this book, we will (usually) live in the natural numbers.

0.3 Some examples

Here are some questions we might try to answer using discrete mathematics. In fact, you will be able to answer all these questions by the end of the book.

Suppose we have a program that will activate condition 4 if conditions 1 and 2 are met but not condition 3. How do we know when condition 4 is active?

We can use logic to represent this program with the statement , and then use truth tables to determine exactly when condition 4 will trigger.

Which of these two programs is more efficient?

A sequence is a function of natural numbers. An example is the number of steps it takes to run a computer program with inputs. If grows must faster than does, that can tell us that one program scales worse for large quantities of data.

Some members of a community are sick. How do we know who is at risk of being infected?

Relations encode relationships between sets of objects. We may compose relations to iterate those relationships over and over again. If person is sick and has come into contact with person , and has come into contact with person , and has since seen , then is at risk.

0.4 How to use this book

Chapters 1-8 must be done in order. Each chapter has a section of exercises located on the problems page. These exercises should be attempted after each chapter. If you are in MATH 174 at Coastal Carolina University, these exercises are your homework.

Then, chapters 9-13 (on combinatorics) and chapters 14-18 (on relations) may be attempted in that order, or in the order 14-18; 9-13. Be aware that some of the exercises in 14-18 assume that the reader has learned the combinatorial material. So, if you follow the alternate order, leave these exercises for later.

This book is paired with a four playlists on my YouTube channel. Playlist 1 (sets and logic) maps to chapters 1-3, playlist 2 (proof and recursion) maps to chapters 4-8, playlist 3 (combinatorics) maps to chapters 9-13, and playlist 4 (relations) maps to chapters 14-18.

These videos are very short demonstrations and examples of the material pursued in depth by the book. I am not sure which ordering is better: book then videos, or videos then book. Choose your own adventure.

I try to use the invitational “we” throughout the book and maintain some level of formality, but sometimes my annoying authorial voice pokes through. I apologize if that bothers you.

Each chapter ends with several “takeaways” that summarize the chapter. Starting with the fourth chapter, if necessary, each chapter begins with hint to go back and look at some things you may not have thought about in a while.

0.5 The point of this book

– is to teach you discrete mathematics, of course.

But much more broadly, this book is intended to teach you to think mathematically. You may be a student who has never seen any math beyond calculus or algebra, or perhaps you dread doing math. The point of this book is to get you to learn to think deeply, communicate carefully, and not shy away from difficult material.

As you proceed, have something to write with on hand. Work each example along with the book. Then, at the end of each chapter, work the exercises. Some are more difficult than others, but are well worth doing to improve your understanding. Work along with someone at roughly your skill level, share solutions and ask each other questions.

Everyone can be a “math person!” So, let’s get started.

1. Sets

Videos:

1.1 Membership

For nearly all interested parties, sets form the foundation of all mathematics. Every mathematical objects you have ever encountered can be described as a set, from numbers themselves to functions and matrices.

We will say that a set is an unordered collection of objects, called its elements. When is an element of the set , we write .

Examples. Our starting point will be the set of all natural numbers,

Certain sets are special enough to get their own names, with bold or “blackboard bold” face. Sometimes (especially in ink) you will see instead. Note that the elements of the set are enclosed in curly braces. Proper notation is not optional; using the wrong notation is like saying “milk went store” to mean “I went to the store to get milk.” Finally, not everyone agrees that is a natural number, but computer scientists do.

If we take and include its negatives, we get the integers

Notice that in the case of an infinite set, or even a large finite set, we can use ellipses when a pattern is established. One infinite set that defies a pattern is the set of prime numbers. You may recall that a prime number is a positive integer with exactly two factors: itself and . We may list a few: but there is no pattern that allows us to employ ellipses.

Fortunately there is another way to write down a set. All previous examples have used roster notation, where the elements are simply listed. It may be more convenient to use set-builder notation, where the set is instead described with a rule that exactly characterizes this element. In that case, the set of primes can be written as

To provide one last example, consider the set of all U.S. states. In roster notation this set is a nightmare: {AL, AK, AZ, AR, CA, CO, CT, DE, FL, GA, HI, ID, IL, IN, IA, KS, KY, LA, ME, MD, MA, MI, MN, MS, MO, MT, NE, NV, NH, NJ, NM, NY, NC, ND, OH, OK, OR, PA, RI, SC, SD, TN, TX, UT, VT, VA, WA, WV, WI, WY}. But in set-builder notation we simply write

Definition 1.1.1. Two sets and are equal if they contain exactly the same elements, in which case we write .

Remember that sets are unordered, so even .

Definition 1.1.2. Let be a finite set. Its cardinality, denoted , is the number of elements in .

Example. If , then .

We can talk about the cardinality of infinite sets, but that is far beyond the scope of this course.

1.2 Containment

One of the most important ideas when dealing with sets is that of a subset.

Definition 1.2.1. Let and be sets. We say is a subset of if every element of is also an element of .

Examples. Put , , and . Then , , but and .

In mathematics, true statements are either definitions (those taken for granted) or theorems (those proven from the definitions). We will learn to prove more difficult theorems later, but for now we can prove a simple one.

Theorem 1.2.2. Every set is a subset of itself.

A proof is simply an argument that convinces its reader of the truth of a mathematical fact. Valid proofs must only use definitions and the hypotheses of the theorem; it is not correct to assume more than you are given. So the ingredients in the proof of this theorem are simply going to be a set and the definition of the word “subset.”

Proof. Let be a set. Suppose is an arbitrary element of . Then, is also an element of . Because was shown to have any arbitrary element of , then .

Notice that the actual make-up of the set is irrelevant. If has an element, then also has that element, and that is all it takes to conclude . It would be incorrect to try to prove from an example: just because is a subset of itself doesn’t necessarily mean that is!

We will discuss logic and proof-writing at length as we continue. For now, let’s continue learning about sets and proving the “simple” theorems as we go.

Definition 1.2.3. The set with no elements is called the empty set, denoted or .

With a little logical sleight-of-hand, we can prove another (much more surprising) theorem.

Theorem 1.2.4. The empty set is a subset of every set.

Proof. Let be a set. We may conclude if every element of is an element of . Observe that this statement is equivalent to saying that no elements of are not elements of . Because there are no elements of at all, this statement is true; and we are done.

Perhaps you already agree with the central claim of the proof, that there are no difference between the statements "everything in is in " and "nothing in is not in ". If you do not, take it on faith for now. When we study predicate logic later you will have the tools to convince yourself of the claim.

Such a statement that relies on the emptiness of is said to be vacuously true.

When , we say contains or that is a superset of . With the idea of containment in hand we can construct a new type of set.

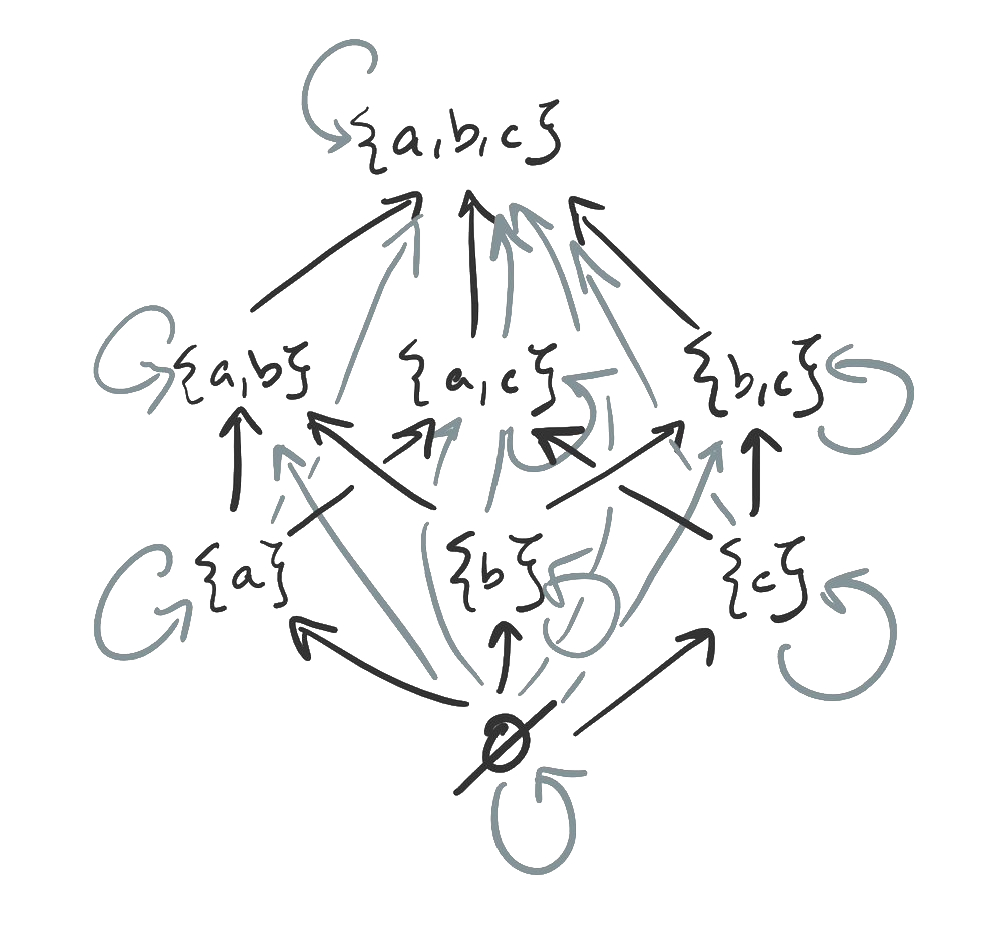

Definition 1.2.5. Let be a set. Its power set is the set whose elements are the subsets of . That is,

Wait - our elements are sets? Yes, and this is perfectly fine! Elements can be all sorts of things, other sets included. When we have a “set of sets” we typically refer to it as a family or a collection to avoid using the same word and over and over.

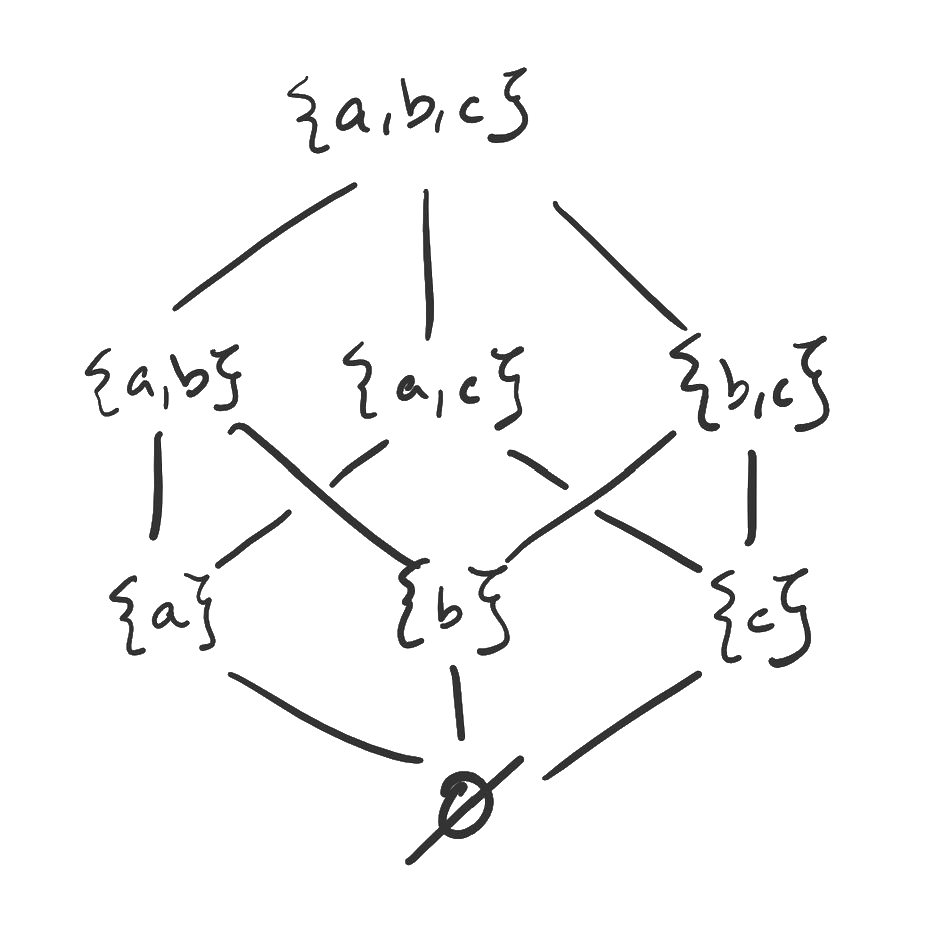

Example. Let . Then

Notice that the number of sets in can be derived from the number of elements in .

Theorem 1.2.6. If is finite, then .

We will come back to prove this theorem much later, once we have learned how to count!

1.3 The algebra of sets

You are used to word “algebra” as it refers to a class where you must solve some equations for . If you think about those classes, they are really about understanding operations and functions of real numbers. Think about it: a successful algebra student will know how to manipulate sums, products, polynomials, roots, and exponential functions – ways of combining and mapping real numbers.

So, “algebra” in general refers to the ways objects may be combined and mapped. In this section, we will see many ways that sets can be combined to produce new sets.

Definition 1.3.1. Let and be sets. Their union is the set of all elements that are in at least one of or ; in set builder notation this is

Definition 1.3.2. Let and be sets. Their intersection is the set of all elements that are in both and ; in set builder notation this is

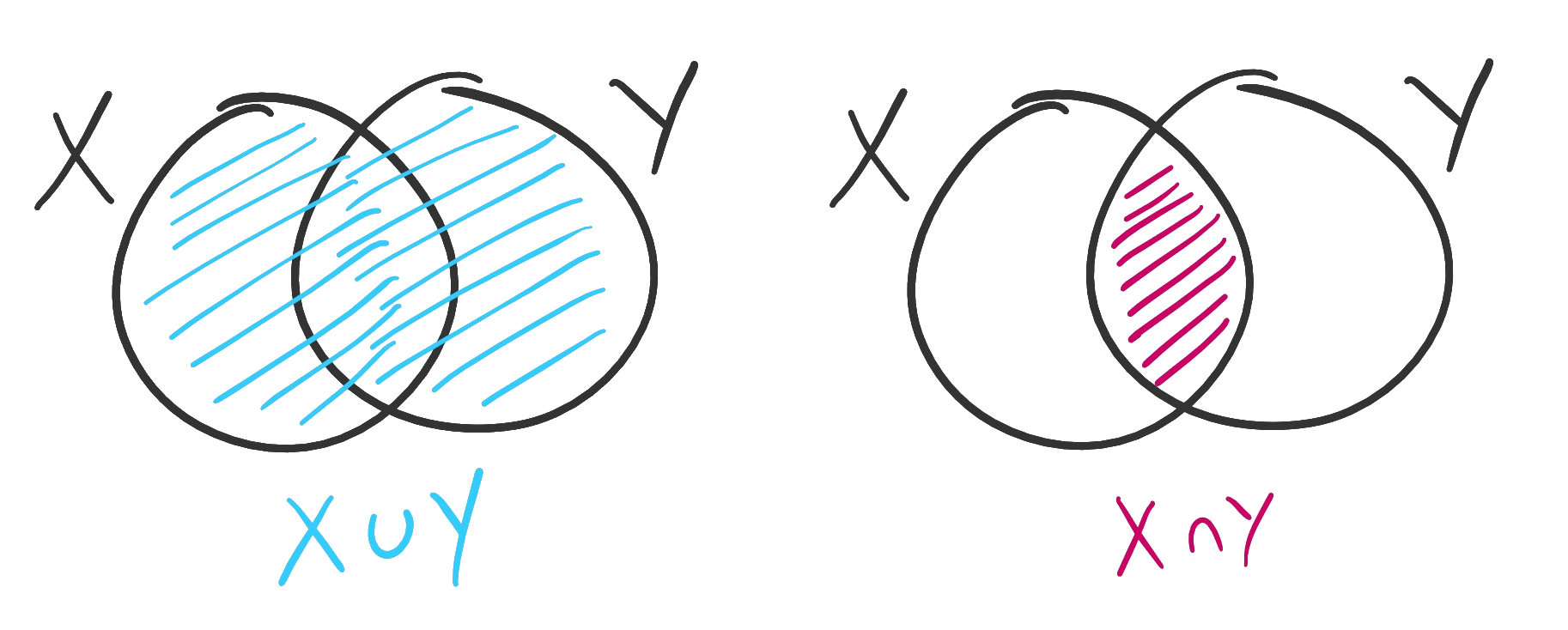

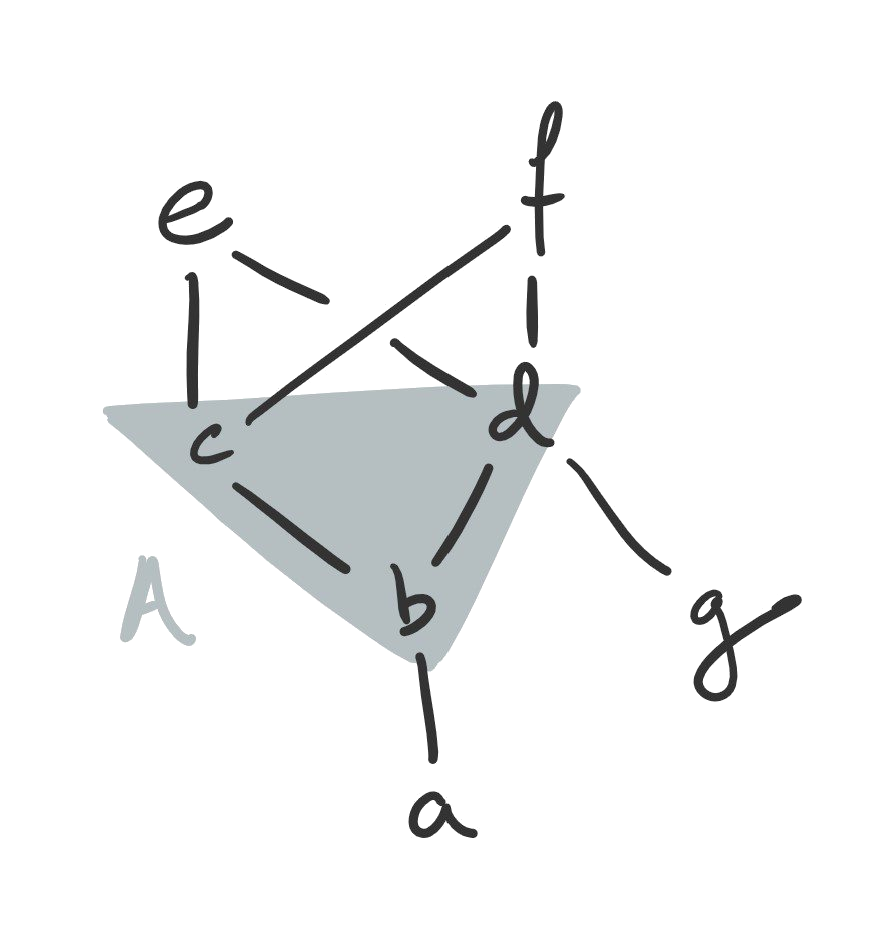

A useful way to visualize combinations of sets is with a Venn diagram. The Venn diagrams for union and intersection are shown below.

Figure. On the left, the union of two sets; on the right, the intersection.

Example. Let and . Their union is

and their intersection is Notice that we do not repeat elements in a set. An element is either in the set or it isn’t. (A generalization, the multiset, will be developed in Chapter 11.) Therefore, (recall, the number of elements in the union) won’t just be .

Theorem 1.3.3. If and are finite sets, then

Proof. If an element is only in , it will be counted by . If an element is only in , it will be counted by . Therefore any elements in will be counted twice. There are exactly of these elements, so subtracting that amount gives .

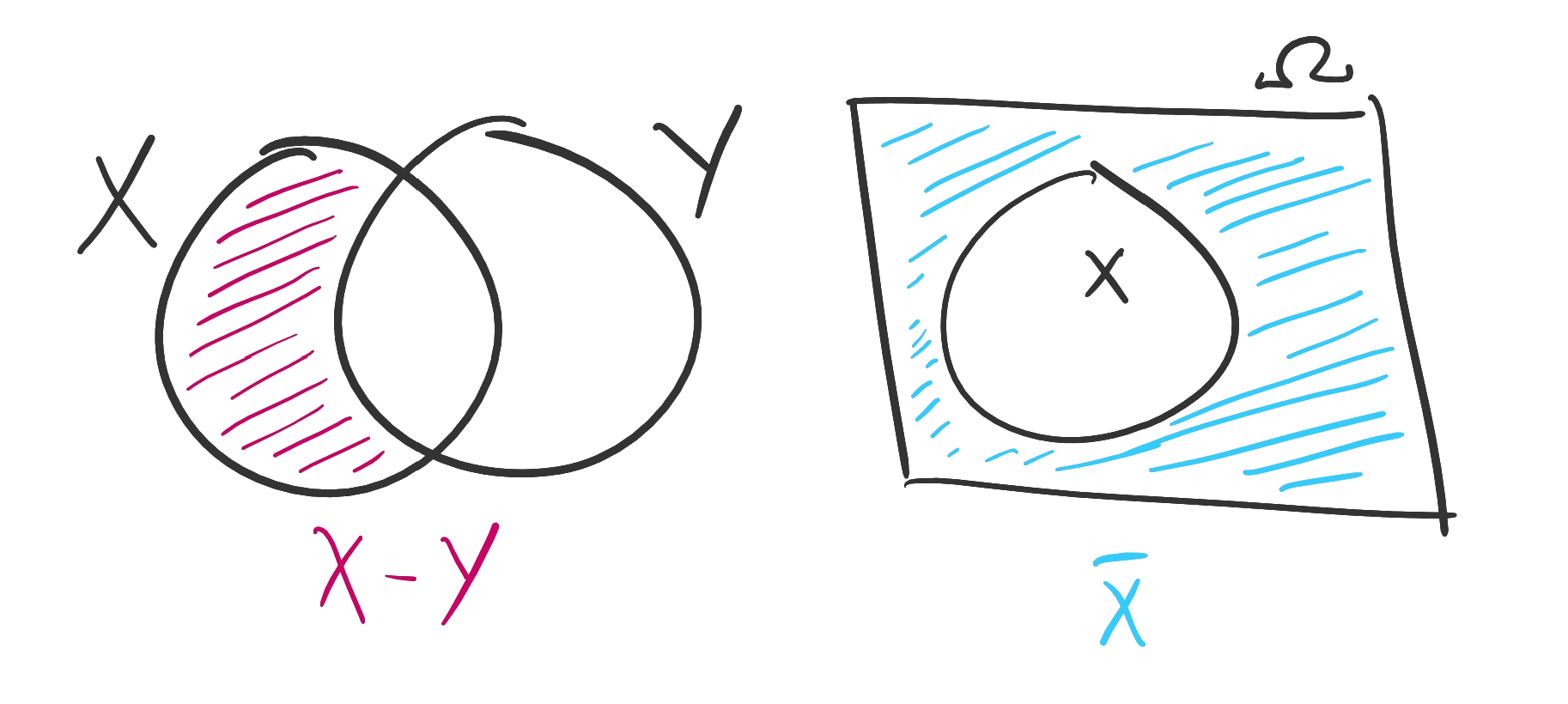

Definition 1.3.4. Let and be sets. The difference is the set of all elements in but not ; i.e.

Example. Like before, let and . Then . Notice that ; just like with numbers, taking a diference is not commutative. (That is, the order matters.)

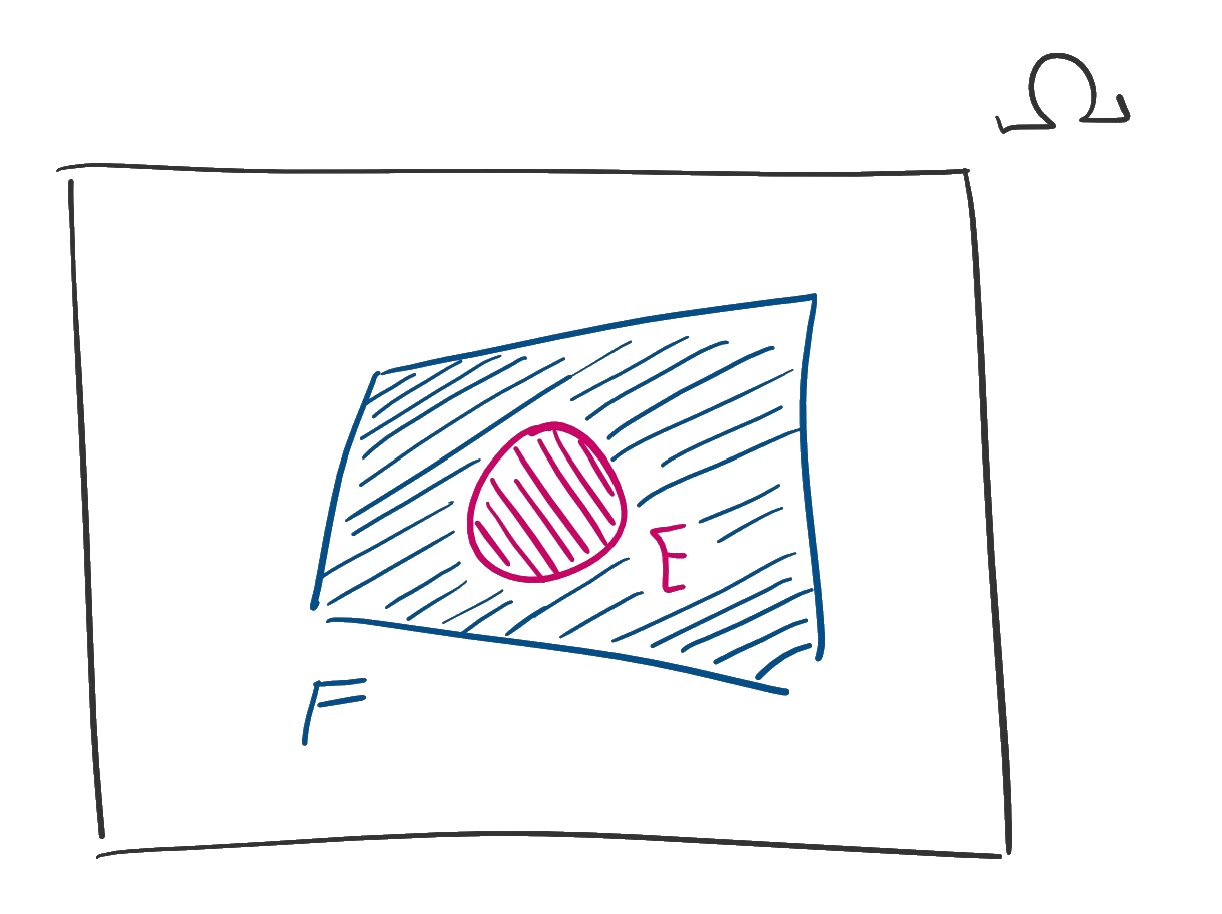

Often when working with sets there is a universal or ambient set, sometimes denoted , that all of our sets are assumed to be subsets of. This set is typically inferred from context.

- In calculus, this set might be .

- If my set is “students taking MATH 174,” my universal set might be the set of all students at Coastal Carolina.

- In probability theory, the sets are events (e.g. “the roll is odd”) and is the sample space (e.g. all possible rolls of the die).

Definition 1.3.5. Let be a set contained in some universal set . The complement of (relative to ), denoted , is all the elements not in . In other words, .

Figure. On the left, the difference of two sets shaded in pink. On the right, the complement of a set shaded in blue.

Example. Suppose is contained in the universal set . Then .

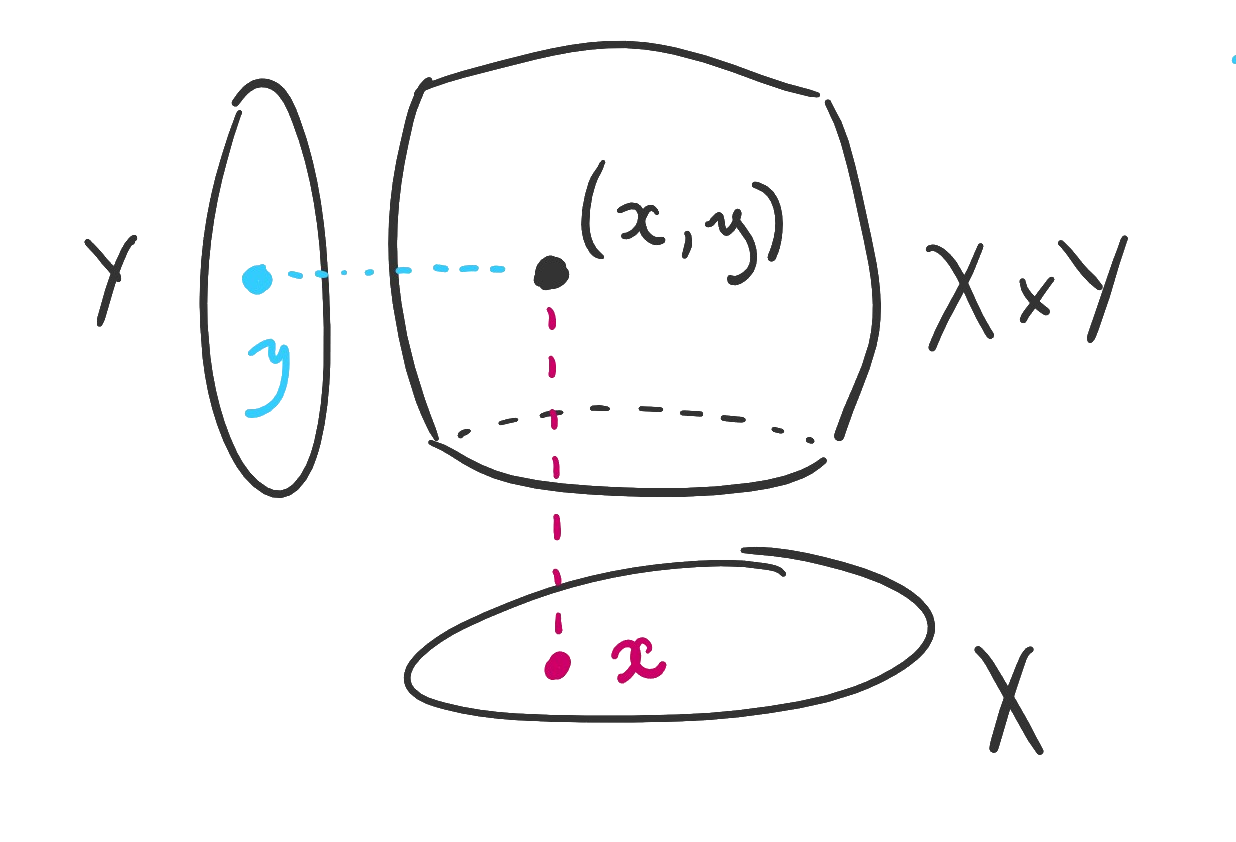

Definition 1.3.6. Let and be sets. Their Cartesian product (in this book, just “product”) is the set The elements of the product are called ordered pairs.

As the name implies, the ordering of the entries in an ordered pair matters. Therefore, we will adopt the convention that unordered structures are enclosed in curly braces and ordered structures are enclosed in parentheses . The immediate consequence to this observation is that is different from .

Figure. A visualization of the product of two sets.

Examples. You are likely familiar with , the Cartesian plane from algebra and calculus. The elements of this (infinite) set are ordered pairs where both and are real numbers.

To make a discrete example, put and . Then

As an exercise, calculate yourself. The pair is an element.

Theorem 1.3.7. If and are finite sets, then .

Proof. The elements of may be tabulated into a rectangle, where each row corresponds to an element of and each column corresponds to an element of . There are rows and columns and therefore elements in the table.

1.4 Operations involving more than two sets

Note: While this section is cool, we will not make use of its content very often in this course. You may choose to skip this section for now and return to it after Chapter 8, when you are more comfortable with the material.

There is one last thing to say about sets (for now, anyway). There is no reason to limit ourselves to two sets in union, intersection, and product. If we have a family of sets , we may take -ary unions, intersections, and products.

The -ary union is the set of elements that are in at least one of the sets .

The -ary intersection is the set of elements that are in all of the sets .

The -ary product is the set of ordered -tuples , where the element is a member of the set .

Suppose instead the family is infinite. We will, exactly once in this book, need to take an infinite union. The infinite union is the set of all elements that are in at least one of the . (Notice that the definition hasn’t changed – just the number of sets!) Likewise the infinite intersection is the set of elements in all the .

Takeaways

- A set is a collection of objects. Given a mathematical object, there is almost definitely a way to define that object as a set.

- A given set has many subsets, the collection of which forms its power set.

- The cardinality of a finite set is the number of its elements.

- There are many ways to combine and manipulate sets: union, intersection, difference, and product.

2. Propositional logic

Videos:

- Negation, conjunction, and disjunction

- Conditional statements

- Compound statements

- Rules of inference

- Logical equivalence

In mathematics, we spend a lot of time worried about whether a sentence is true or false. This is fine in the case of a simple sentence such as “The number is prime.” (That sentence is true.) But there are much more complicated sentences lurking around, and whole constellations of sentences whose truth depends on one another. (An unsolved problem in mathematics is deciding whether the Riemann hypothesis, a statement about the prime numbers, is true. Most people believe it is, and many results in the literature are true given the hypothesis. The Riemann hypothesis is going to make or break a lot of research.)

So, we are interested in tools that will allow us to judge whether complicated statements are true. Such models of truth are called logic.

2.1 Statements and connectives

Definition 2.1.1. A statement is a sentence that is either true or false.

Examples. “The number is prime” and “It is perfectly legal to jaywalk” are statements. Non-statements include questions (“Is it raining?”), commands (“Do your homework”), and opinions (“Mint chocolate chip ice cream is the superior flavor”). It is important to note, not necessarily for mathematical purposes, that opinions are statements of pure taste. Claims whose truth values exist but are unknown are beliefs.

Atomic propositional statements of the type discussed in this chapter are flatly always true or false. You may think of them in the same way you think of constant functions in algebra. They are denoted with lowercase letters , , , etc. If the only statements were atomic, logic would be boring. Fortunately, we can combine statements with connectives. In the next chapter, we will look at predicate statements whose truth values depend on an input. Likewise, these may be thought of as the non-constant functions.

Compound statements arise from combining atomic statements with connectives. They are denoted using Greek letters like (“phi”), (“psi”), and (“gamma”). The truth of a compound statement depends on the connectives involved and the truth values of the constituent statements. A tool for determining the truth of a compound statement is called a truth table.

In a truth table for the compound statement , the left side of the table is the atomic statements involved in and the right side of the table is itself. Each row gives a possible combination of truth values for the atomic statements, and the corresponding truth value for . At this point, it is easier to start introducing connectives and seeing some truth tables.

Definition 2.1.2. Let be a statement. Its negation is the statement , the statement that is true exactly when is false and vice-versa.

The statement is typically read "not " or “it is not the case that is true.” If we have an English rendering of , we try to write in a natural-sounding way that also conveys its logical structure.

Example. Suppose represents the statement “It is raining.” Then, stands for “It is not raining.”

Below is the truth table for .

As you can see, on the left are columns corresponding to the atomic statements involved in (just ), and each row is a possible combination of truth values for these statements. Here is a quick, useful theorem without proof:

Theorem 2.1.3. If the compound statement involves different atomic statements, then its truth table has rows.

Look familiar? We will prove this theorem when we prove the related theorem about power sets later on.

Negation is called a unary connective because it only involves one statement. Our remaining connectives are all binary connectives, as they combine two statements.

Definition 2.1.4. Let and be statements. Their conjunction is the statement , which is true only when and are both true.

The symbol in the conjunction is called a “wedge,” and is read " and ." This definition is meant to be analogous to our English understanding of the word “and.” The statements and are called conjuncts.

Example. Letting be “It is raining” and be “I brought an umbrella,” the statement is “It is raining and I brought an umbrella.” The statement is true only if it is currently raining and the speaker brought an umbrella. If it is not raining, or if the speaker did not bring their umbrella, the conjunction is false.

Here is the truth table for :

Notice that this table is organized intentionally, not haphazardly. The rows are divided into halves, where is true in one half and then false in the other half. The rows where is true are further subdivided into half where is true and half where is false. If there were a third letter, we would subdivide the rows again, and so on. This method of organizing the truth table allows us to ensure we did not forget a row. Our leftmost atomic letter column will always be half true followed by half false, and the rightmost atomic letter column will always alternate between true and false.

Definition 2.1.5. Let and be statements. Their disjunction is the statement , which is true if at least one of or is true.

The symbol in the conjunction is called a “vee” and is read " or ." This definition is meant to be analogous to our English understanding of the word “or.” The statements and are called disjuncts. (Did you notice that I was able to copy and paste from an above paragraph? Conjunction and disjunction share a very similar structure.)

Example. Letting be “It is raining” and be “I brought an umbrella,” the statement is “It is raining or I brought an umbrella.” This time, the speaker only needs to meet one of their conditions. They are telling the truth as long as it is raining or they brought an umbrella, or even if both statements are true.

The truth table for follows.

Of great importance to computer science and electrical engineering, but not so much in mathematics (so it will not appear again in this book), is the exclusive disjunction (or “exclusive-or”). It is a modified disjunction where exactly one of the disjuncts must be true.

Definition 2.1.6. If and are statements, their exclusive disjunction is the statement , which is true if and only if exactly one of and is true.

The statement is read " exclusive-or ."

Example. The statement “You can have the soup or the salad” is likely meant as an exclusive disjunction.

In mathematics, disjunctions are generally considered inclusive unless stated otherwise. (An example for us all to follow!)

Our next connective is probably the most important, as it conveys the idea of one statement implying another. As we discussed earlier in the chapter, mathematics is nothing but such statements. It is also the only connective that is not relatively easy to understand given its English interpretation. So we will begin with the truth table, and think carefully about each row.

Definition 2.1.7. If and are statements, the conditional statement is true if can never be true while is false.

There are many ways to read , including “if , then ,” " implies “, and " is sufficient for .” The statement before the arrow is the antecedent and the following statement is the consequent. Here is the conditional’s truth table.

That is true in the bottom two rows may surprise you. So, let’s consider each row separately.

Example. Let be “You clean your room” and be “I will pay you $10,” so that is “If you clean your room, then I will pay you $10.”

In the case that both and are true, the speaker has told the truth. You cleaned, and you were paid. All is well.

In the case that is true but is false, the speaker has lied. You cleaned the room, but you did not earn your money.

What if is false? Well, then the speaker did not lie. The speaker said that if you clean your room you will be paid. If the room isn’t cleaned, then cannot be falsified, no matter where is true or false.

If that explanation is not sufficient for you, reread the definition of the conditional: being true means that cannot be true without . If you are unsatisfied by the English connection, then consider to be a purely formal object whose definition is the above truth table.

Definition 2.1.8. If and are statements, the biconditional statement is true if and are both true, or both false.

The statement may be read as " if and only if , " is necessary and sufficient for ," or " is equivalent to ." Here is the truth table.

Example. Let be “You clean your room” and be “I will pay you $10,” so that is “I will give you $10 if and only if you clean your room.” (The statements were reordered for readability. We will see later in the chapter that this is the same statement, but this wouldn’t work for .) This time, the clean room and the $10 paycheck are in total correspondence; one doesn’t happen without the other.

2.2 Connecting connectives

We are not required to use one connective at a time, of course. Most statements involve two or more connectives being combined at once. If and are statements, then so are: , , , , and . This fact allows us to combine as many connectives and statements as we like.

How should we read the statement ? Should it be read "; also, if then "? Or, “If and , then ?” Just like with arithmetic, there are conventions defining which connective to apply first: negation, then conjunction/disjunction, then implication, then bi-implication. So, "If and , then " is correct. However, to eliminate confusion in this book we will always use parentheses to make our statements clear. Therefore, we would write this statement as .

Remember that a statement involving letters will have rows in its truth table. The column for the left-most statement letter should be true for half the rows then false for half the rows, and you should continue dividing the rows in half so that the right-most statement letter’s column is alternating between true and false.

Finally, it is a good idea to break a statement into small “chunks” and make a column for each “chunk” separately. Observe the following example.

Example. Write down the truth table for the statement . When is this statement false?

Since the statement has letters, there will be rows to the truth table. Let’s go through the first row very slowly, where all four atomic statements are true. Then is true and is false. Since is true and is false, that means is false. Finally, because its antecedent is true and its consequent is false, the compound statement would be false.

Here is the full truth table. Be sure you understand how each row is calculated.

There are tricks to calculate truth tables more efficiently. However, it wouldn’t do you any good to read them; they must come with practice.

2.3 Inference and equivalence

Mathematics is all about relationships. If the statement is always true, then it tells us that whenever is true then must be as well. If the statement is always true, then it tells us that from the point of view of logic and are actually the same statement. That is, it is never possible for them to have different truth values.

Definition 2.3.1. A tautology is a statement that is always true, denoted .

Definition 2.3.2. A contradiction is a statement that is always false, denoted .

Definition 2.3.3. A rule of inference is a tautological conditional statement. If is a rule of inference, then we say implies and write .

Remember that a conditional statement does not say that its antecedent and consequent are both true. It says that if its antecedent is true, its consequent must also be true.

There are many rules of inference, but we will explore two.

Definition 2.3.4. Modus ponens is the statement that says we may infer from and . Symbolically:

Example. Consider the conditional statement “If you clean your room, then I will give you $10.” If the speaker is trustworthy, and we clean our room, then we may conclude we will be receiving $10.

Another example: “If a function is differentiable, then it is continuous.” (You may know the meanings of these words, but it is not important.) If this statement is true, and the function is differentiable, then without any further work we know that is continuous.

Theorem 2.3.5. Modus ponens is a rule of inference.

Notice that in the definition no claim is made that modus ponens is always true. That is up to us.

Since we know how to use truth tables, we may construct one to show that is always true. Before reading the table below, try to make your own!

Proof. The above table shows that is true no matter the truth of and .

Definition 2.3.6. In the context of conditional statements, transitivity is the rule that if implies and implies , then implies . Or,

The wording of the definition above should you make you suspicious that this is not the only time we will see transitivity. In fact, you may have seen it before…

Example. If you give a mouse a cookie, then he will ask for a glass of milk. If he asks for a glass of milk, then he will also ask for a straw. Therefore, if you give a mouse of cookie then he will ask for a straw.

Theorem 2.3.7. Transitivity is a rule of inference.

We could simply write down another truth table and call it a day. In fact, go ahead and make sure you can prove transitivity with a truth table. Once you’re done, we’ll try something slicker to prove transitivity.

Proof. Suppose that and are true statements, and that moreover is true. By modus ponens, must also be true. Then, is true, by a second application of modus ponens. Therefore, if is true, must be also; this is the definition of .

Pretty cool, right? We can cite proven theorems to prove a new one.

Does it work the other way? In other words: if I know that , does that mean ? Regrettably, no: consider the case there and are true but is false.

Some rules of inference do work both ways. These are called equivalences.

Definition 2.3.8. If is a tautology, then it is an equivalence and and are equivalent statements. We write .

Equivalent statements are not the same statements, but they are close enough from the point of view of logic. Equivalent statements are interchangeable, and may be freely substituted with one another.

We will quickly run through many equivalences before slowing down to prove a few.

Algebraic properties of conjunction, disjunction, and negation

Our first batch of statements should look familiar to you; these are the ways that statements “act like” numbers. As you read each one, try to justify to yourself why it might be true. If you aren’t sure of one, make a truth table.

| Equivalence | Name |

|---|---|

| Double negation | |

| Commutative of conjunction | |

| Commutative of disjunction | |

| Associativity of conjunction | |

| Associativity of disjunction | |

| Conjunctive identity | |

| Disjunctive identity | |

| Conjunctive absorption | |

| Disjunctive absorption | |

| Conjunctive cancellation | |

| Disjunctive cancellation |

Distributive properties

In our numbers, multiplication distributes over addition. This is one fact that is likely implemented twice in your head, once for a number: and once for “the negative” (truly the number ): However, these rules are one and the same; the distributive law. Likewise, we will have distributive laws for negation, conjunction, and disjunction.

| Equivalence | Name |

|---|---|

| DeMorgan’s law | |

| DeMorgan’s law | |

| Conjunction over disjunction | |

| Disjunction over conjunction |

DeMorgan’s law is one of the most important facts in basic logic. In fact, we will see it three times before we are through. In English, DeMorgan’s law says that the negation of a conjunction is a disjunction and vice-versa.

To show that and are equivalent, we can show that the biconditional statement is a tautology. Thinking about the definition of the biconditional gives us another way: we can show that the truth tables of and are the same; in other words, that both statements are always true and false for the same values of and .

Proof (of DeMorgan’s law for conjunction). Let and be statements. The statements and are equivalent, as shown by the below truth table.

Therefore, .

You’ll notice a few columns are skipped in the above truth table. After a few tables, it is more convenient to skip “easy” columns. For example, we know that is true only when and are both true, and false everywhere else. Therefore, we can “flip” that column to quickly get the column for .

As an exercise, prove DeMorgan’s other law.

Properties of the conditional

Finally, we come to the properties of conditional (and biconditional) statements. These do not have “obvious” numerical counterparts, but are useful all the same.

| Equivalence | Name |

|---|---|

| Material implication | |

| False conditional | |

| Contraposition | |

| Mutual implication |

Material implication is the surprising result that the conditional is just a jumped-up disjunction! In fact, DeMorgan’s law, material implication, and mutual implication together prove that all of the connectives we’ve studied can be implemented with just two: negation and one other. However, this would make things difficult to read, so we allow the redundancy of five connectives.

Let’s prove material implication with a truth table, and then we will prove contraposition with another method.

Proof (of material implication). Let and be statements and observe that the truth tables for and are the same:

Therefore, .

There is another way to think about material implication. The statement means that never happens without . Another way to say this is that happens, or else did not; so, .

Before we prove contraposition by the other method, we must justify said method with a theorem.

Theorem 2.3.9. Statements and are equivalent if and only if and are both rules of inference.

The phrase “if and only if” in a mathematical statement means that the statement “works both ways.” In this case, means and are both rules of inference; and conversely, and both being rules of inference means . It means that “” and “” and are both rules of inference" are equivalent statements. Fortunately, we already have a rule that justifies this equivalence.

Proof (of Theorem 2.3.9). Mutual implication says that .

It’s that easy. Whenever we have a conditional statement and its converse (the order reversed), we have the biconditional; likewise, we may trade in a biconditional for two converse conditional statements.

Now we will prove contraposition by showing that implies , and vice-versa. Before you read the below proof, write down these rules: material implication, double negation, and commutativity of disjunction.

Proof (of contraposition). Suppose and are statements and that is true. By material implication, is true. Commutativity says this is the same as . So, we may apply material implication backwards, negating this time, to get ; therefore, implies .

Conversely, suppose is true. Then material implication (and double negation) gives . We may commute the disjunction and apply material implication again, negating , to get .

By mutual implication, .

Takeaways

- Statements may be modified and combined in (mainly) five ways: negation, conjunction, disjunction, implication (the conditional), and bi-implication (the biconditional).

- A truth table allows us to see when a compound statement will be true or false.

- If one statement always implies another, that implication is a rule of inference.

- If two statements are always true or always false together, they are equivalent.

- Rules of inference and equivalences may be justified with truth tables or algebraically.

3. Predicate logic

Videos:

- Predicates

- Introduction to quantifiers

- Quantifiers, continued

- Inference and equivalence with quantifiers

3.1 Predicates

Basic propositional logic as you learned it in the preceding chapter is powerful enough to give us the tools we will need to prove many theorems. However, it is deficient in one key area. Consider the following example.

Example. If Hypatia is a woman and all women are mortal, then Hypatia is mortal.

We can all agree that this statement is a tautology; that is, if it is true that Hypatia is a woman (she was; she was a Greek mathematician contemporary with Diophantus) and if it is true that all women are mortal (as far as we know this is the case) then it must follow that Hypatia is mortal (she was murdered in 415).

So the statement is a tautology. If so, the truth table would confirm it. Let symbolize the statement, where stands for the statement that Hypatia is mortal.

Oh no! Our second row gives that the statement is false when is false but and are true. What gives?

Well, consider the statement “If pigs fly and the Pope turns into a bear, then Bob will earn an A on the exam.” Now it is not so clear that this is a tautology, because pigs, the Pope, and Bob’s grade presumably have nothing to do with one another.

But Hypatia and the mortality of women do. What we will need is the ability to signify that two statements are related, whether in subject (e.g. Hypatia) or predicate (e.g. mortality). This is where predicate logic comes in to help.

Definition 3.1.1. A predicate is a function that takes one or more subjects (members of some domain ) and assigns to them all either true or false .

Predicates are typically denoted with capital letters like , , and . Subjects that are known are given lowercase letters like , , and , unless there is something more reasonable to pick like for Hypatia. Subjects whose values are unknown or arbitrary are denoted with lowercase , , , etc.

The definition of predicate above is much more complicated than it needs to be; this is so that we can take the central idea (a function) and continue using it throughout the book. You know a function: an assigment that gives every input exactly one output . We write to signify that is a function whose inputs are elements of the set and whose outputs are elements of the set .

So, in the above definition, the predicate is a function that takes a subject, a member of the set , and assigns it exactly one truth value.

(Take note that propositions, which you studied in the last chapter, may be regarded as constant predicates – functions whose value is the same regardless of the input, like .)

Examples. Letting be the predicate " is a woman" on the domain of humans and stand for Hypatia, is the statement “Hypatia is a woman.” Since this statement is true, the function assigns to .

Let be the predicate “” on the domain and let be the predicate " orbits " on the domain of celestial objects in the sky. The statement is true, but is false. If stands for the earth and for the sun, then is false but is true.

A predicate, once evaluated to a subject, is a statement. Therefore, these statements may be combined into compound statements using the logical connectives we have just studied.

Examples. As before let be the predicate “” on the domain and let be the predicate " orbits " on the domain of celestial objects in the sky, where stands for the earth and for the sun.

The statements , , and are true.

The statements and ae false.

3.2 Quantifiers

This is all well and good, you think, but what about the statement “All women are mortal”? How can we make “all women” the subject of a statement?

Quantifiers allow us to say that a predicate is true for all, or for at least one undetermined, object in its domain.

Definition 3.2.1 Let be a predicate. The universal statement says that is true for all in its domain. The symbol (“for all”) is called the universal quantifier.

Example. The domain is very important. If is the predicate " takes discrete math" then – “Everyone takes discrete math” – is true on the domain of computer science students at Coastal Carolina, but not, say, on the domain of all people in South Carolina.

Definition 3.2.2 Let be a predicate. The existential statement says that is true for at least one in its domain. The symbol (“there exists”) is called the existential quantifier.

Example. Again let be the predicate " takes discrete math". The existential statement says “Someone takes discrete math.” This is now true for people in South Carolina – pick a student at Coastal, or Clemson, or USC – but not true on the domain of dogs. Wouldn’t that be cute, though?

The statement operated on by a quantifier is called its scope. Without parentheses, a quantifier is considered to apply only to the statement it is next to and no others.

Examples. Let be the predicate " takes discrete math" and be the predicate " is a math major".

- The statement means “There is someone who is taking discrete math and is a math major.” Both and apply to the same .

- The statement says “Someone is taking discrete math and someone is a math major.” The subjects of and are possibly different.

- The expression is not a statement at all, because is not a statement. It needs either a quantifier, or for to refer to a specific, known member of the domain.

There are two important quantified statements: “All 's are 's” and "Some is a ". These statements are all over the place in mathematics. We like to characterize mathematical objects by saying all objects of one type also belong to another; or, by saying we can find an object of one type that is another type.

In “All 's are 's”, the word “all” suggests that the universal quantifier is appropriate. What connective should be used to combine the predicates? Observe that the statement says that every is both a and a . This isn’t what we mean to say; we mean to say if something is a then it will also be a . So, the correct rendering of “All 's are 's” is .

For the statement “Some 's are 's”, we mean to say that there is an object that is at once a and a . Therefore, this time the conjunction is appropriate: we write .

| Statement | Implementation |

|---|---|

| “All 's are 's” | |

| “Some 's are 's” |

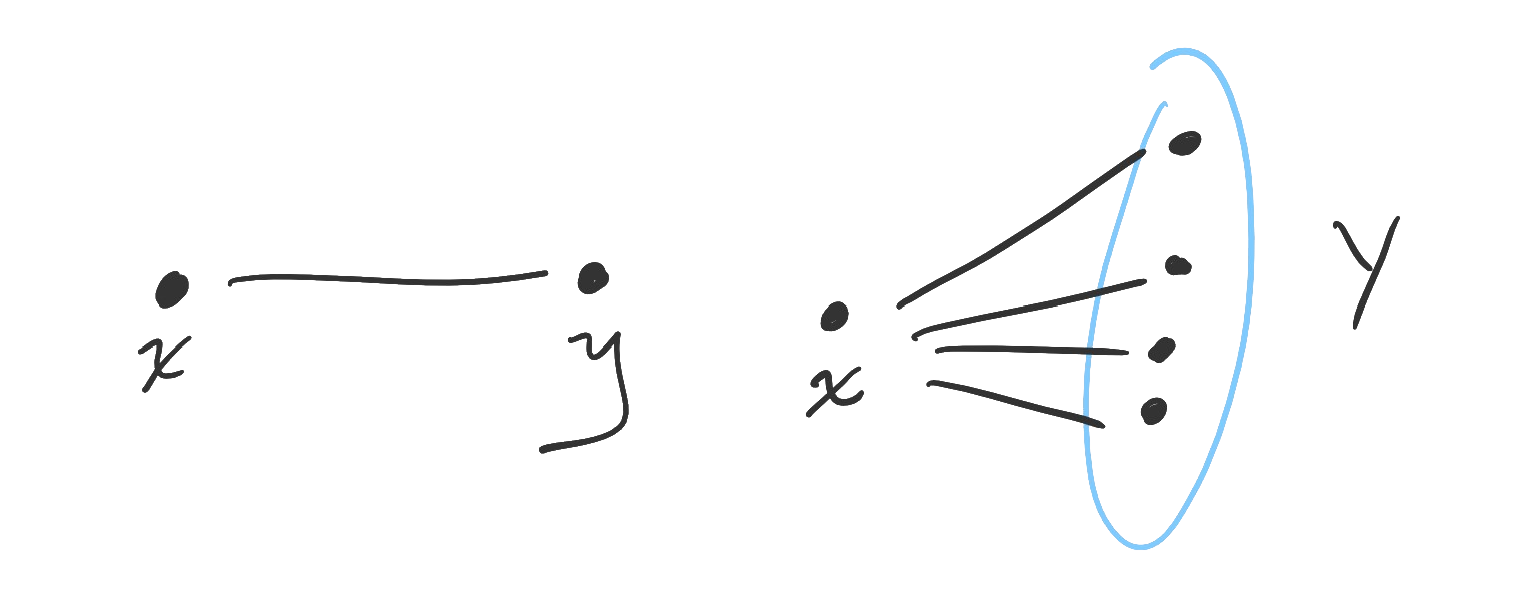

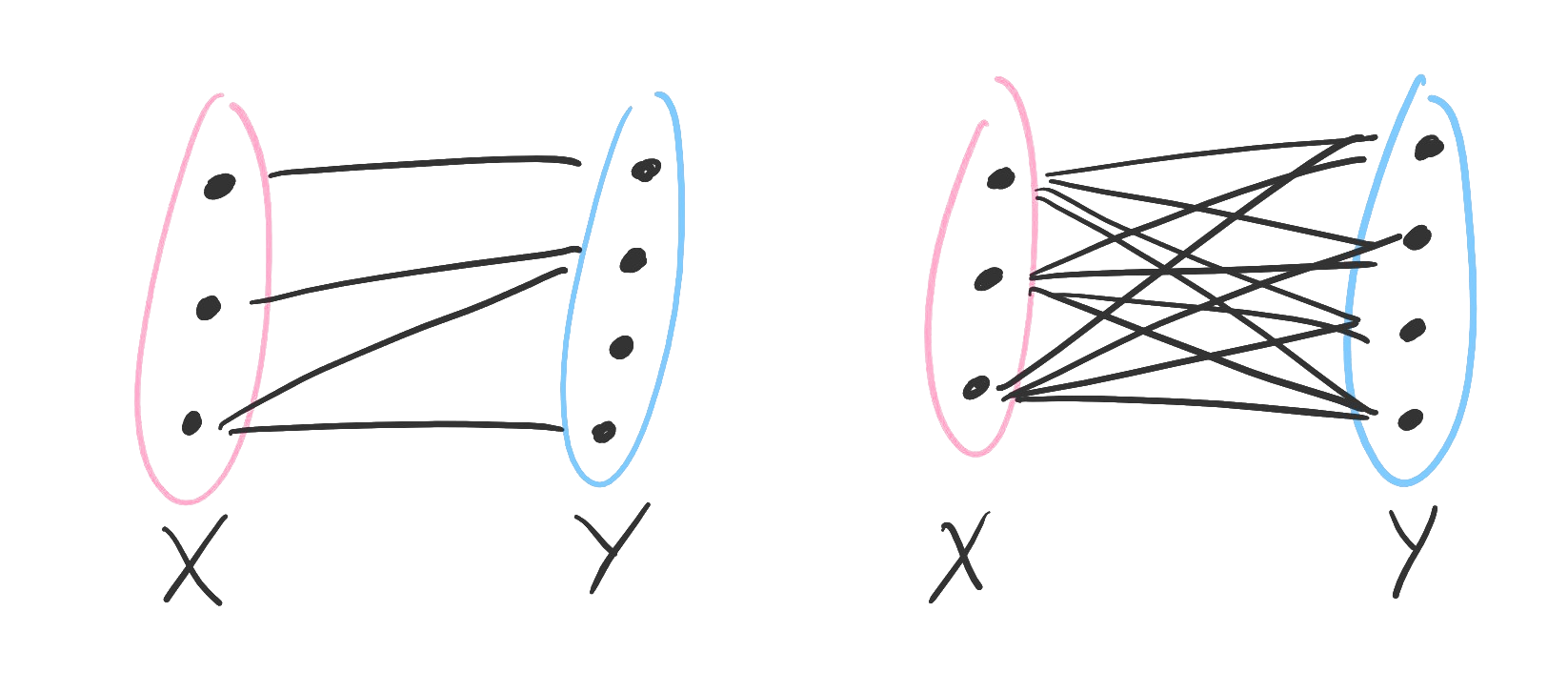

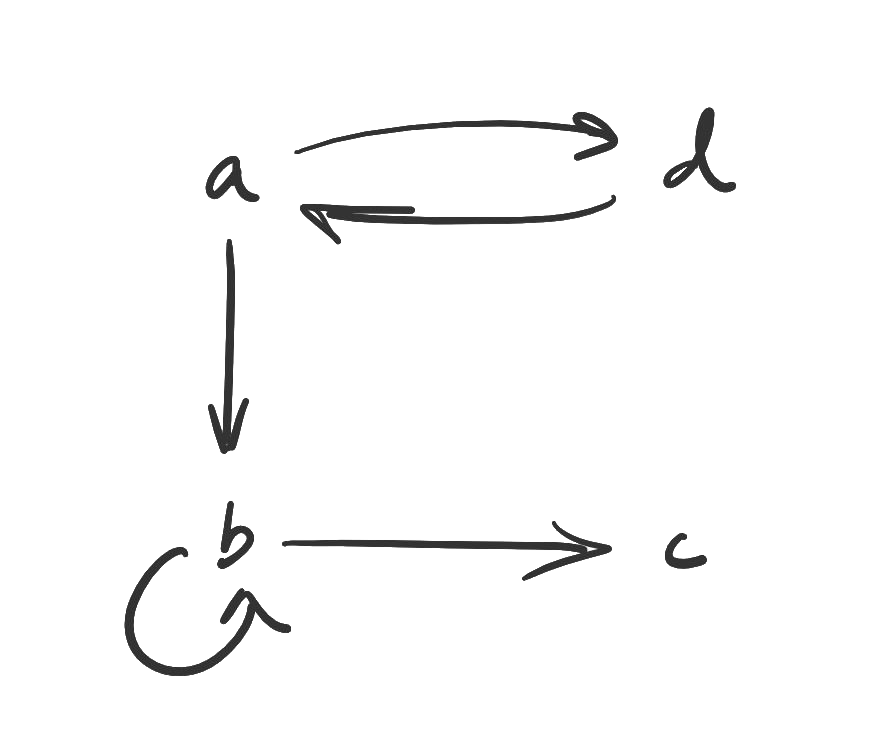

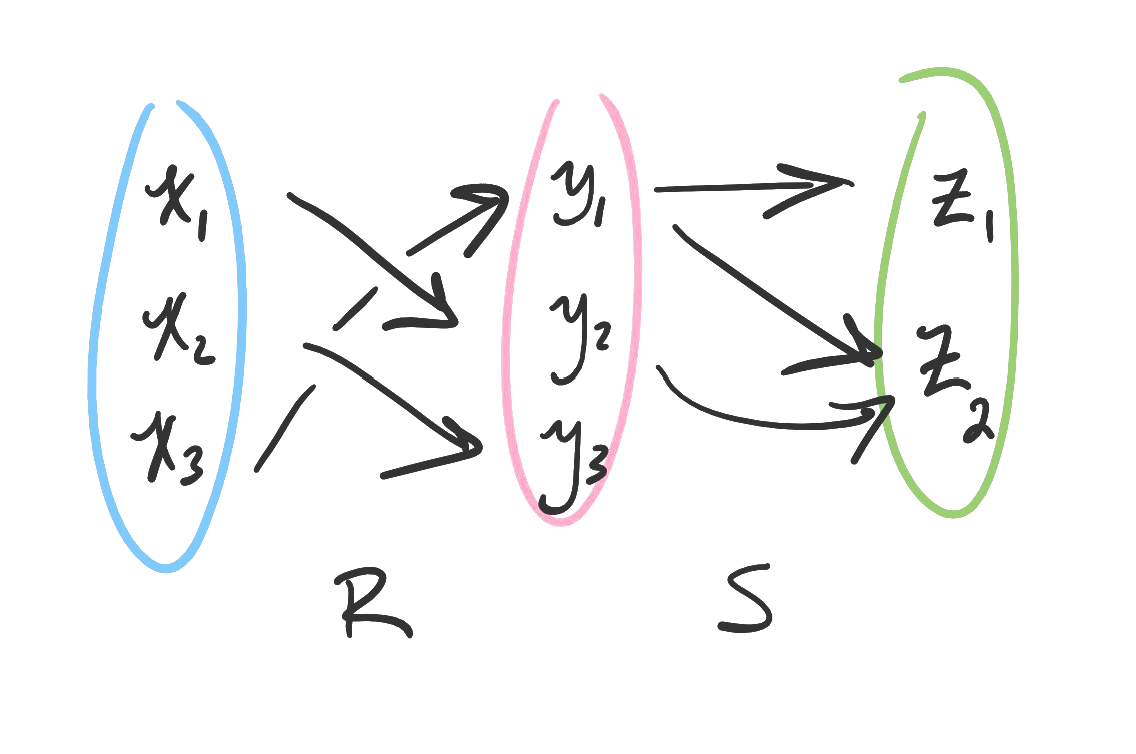

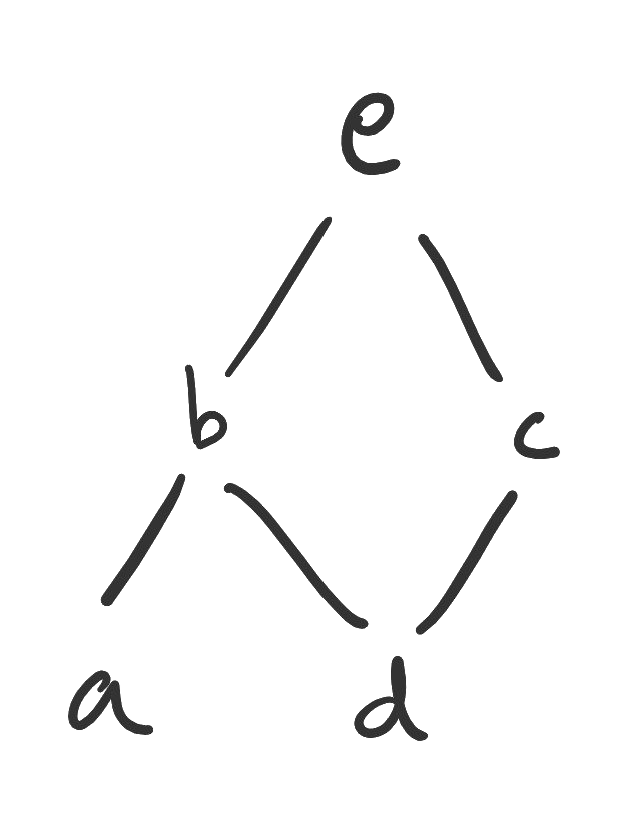

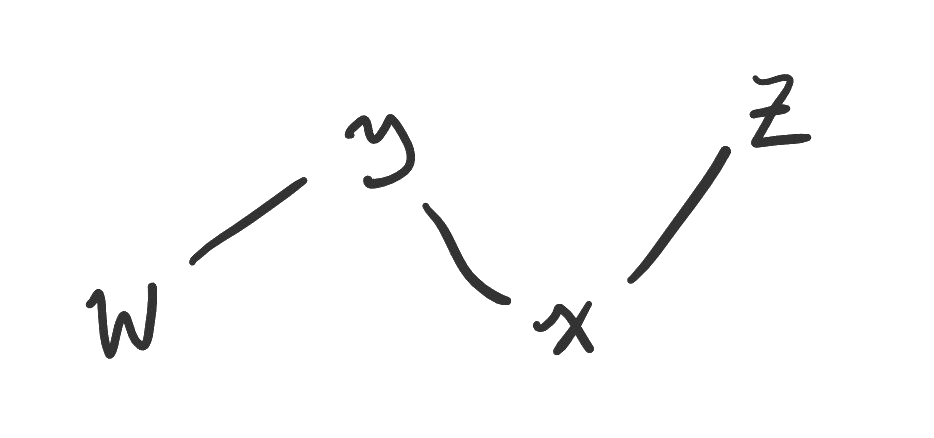

Finally, what is to be done with statements with two or more subjects? The ordering of the quantifiers matters. Here are the four possible combinations of universal and existential quantifiers for two subjects, as well as a diagram corresponding to each.

Examples. Let be the predicate " and are classmates" over the domain of students at some school. Note that, in this case, the order of the subjects does not affect the truth of the statement.

The statement means “There are two students who are classmates.” In other words, there is at least one student who is paired with at least one other student. Some writers say instead, but when the quantifiers are the same we will “collapse” the notation.

Figure. On the left, one object is paired with one object (existential-existential). On the right, one object is paired with many objects (existential-universal).

The statement means “There is a student who is classmates with every student.” We have one student who (somehow!) is classmates with the entire student body.

The statement means “Every student has a classmate.” Notice that their classmate need not be the same person. If we quantify universally first, then each existential object may be different. In the preceding example, we quantified existentially first, so that one person was classmates with everybody.

Figure. On the left, many objects are each paired with an object (universal-existential). On the right, many objects are paired with many objects (universal-universal).

The statement means “Every student is classmates with every other student.” Every object in our domain is paired up with every other object in the domain, including themselves! (Don’t think too hard about that for this example.)

3.3 Inferences and equivalences for quantifiers

Quantifiers evaluated on a predicate are statements, since they are functions to . Therefore, some quantified statements imply others, and some pairs imply each other. In this section we will learn a few important rules for quantifiers, before finally proving that our opening statement about Hypatia is indeed a tautology.

Theorem 3.3.1 (DeMorgan’s laws) Let be a predicate. Then and .

Does that name look familiar? Here is our second application of DeMorgan’s laws. (The third will appear in the exercises.) You will see how they are used in the proof.

Proof. Consider the universal statement on the domain . We may rewrite

and

Therefore,

Using DeMorgan’s law,

which is the same as the statement .

That may be proven in the same way.

The idea is that a universal statement is a “big conjunction” and an existential statement is a “big disjunction.” The proof follows from the DeMorgan’s laws you learned in the preceding chapter .

The next two theorems deal with how universal and existential statements may imply one another.

Theorem 3.3.2 (Universal instantiation) If is a member of the domain of the predicate , then .

Proof. If is true for every member of its domain and is a member of said domain, must be true for .

Theorem 3.3.3 (Existential generalization) If is a member of the domain of the predicate , then .

Proof. Let be in the domain of . If is true, then is true for some member of its domain.

There are two comments to make about these two theorems. First, universal generalization and existential instantiation are not valid:

Examples. “I was able to easily pay off my college loans, therefore everyone can” is an example of invalid universal generalization.

“Someone in this room killed the wealthy magnate, so it must have been you!” is an example of invalid existential instantiation.

Finally, transitivity gives us that a universal statement implies an existential one: .

We are ready to prove our tautology is, in fact, a tautology. Remember that the statement was “If Hypatia is a woman and all women are mortal, then Hypatia is mortal.”

We have seen that “Hypatia is a woman” may be rendered as for the predicate being " is a woman" and being Hypatia. Likewise, “Hypatia is mortal” could be rendered where is " is mortal". Finally, the statement “All women are mortal” would be .

Theorem 3.3.4 The statement is a tautology.

We have changed our labeling to the more standard , , and to emphasize that this statement is always true no matter what we are talking about.

Proof. Suppose that for some predicate and some in the domain of that is true, and that for some predicate the statement is true.

By universal instantiation, means that is true.

By modus ponens on and , we know must be true.

Therefore, is always true.

Takeaways

- If we need to communicate that two statements talk about the same thing, propositional logic won’t cut it: we need predicates.

- A predicate is a function that takes an input and returns true or false. The predicate itself isn’t a statement, but it is once it’s evaluated on a subject.

- A quantifier yields a statement that a predicate is true for some, or all, objects in its domain.

- "Every – is a – " is a universal conditional statement. "Some – is a – " is an existential conjunction.

- A universal statement implies a specific one, which implies an existential statement.

- The negation of a universal statement is an existential statement and vice-versa.

Proofs

Review:

- Chapter 2: Inferences and equivalences for conditional and biconditional statements

- Chapter 3: Inferences and equivalences for quantifiers

Videos:

Now we have things to talk about – sets and their elements – and ways of talking about those things – propositional and predicate logic. This opens the door to the true game of mathematics: proof.

Mathematics stands apart from the natural sciences because its facts must be proven. Whereas theories in the natural sciences can only be supported by evidence, mathematical facts can be shown to be indisputably true based on the agreed definitions and axioms.

For example, once you have agreed upon the definitions of “integer,” “even,” and the rules of arithmetic, the statement “An even integer plus an even integer is an even integer” is without doubt. In fact, you will prove this statement yourself in the exercises to this chapter.

Now, this introduction is not meant to imply that mathematical epistemology (the study of knowledge) is without its issues. For example: What definitions and axioms are good ones? (“Is there such a thing as a real number?” can entertain you for a while.) What sorts of people decide which mathematical research is worth doing, and who decides which proofs are convincing? Are all published mathematical proofs correct? (They aren’t.) What is the role of computers in mathematical proof?

Fortunately for us, we don’t have to worry about these questions. (Unless we want to. They’re quite interesting.) In this chapter we will simply learn what a mathematical proof is, learn some basic facts about the integers, and practice writing direct and indirect proofs about mathematical facts that are not particularly in dispute.

4.1 Direct proofs

What is a proof? In this book we will adopt Cathy O’Neil’s position that a proof is a social action between speaker/writer and listener/reader that convinces the second party that a statement is true.

Several proofs have been written in this book so far. Many begin with an italicized Proof. and end in a little , as a way to set them off from definitions, theorems, comments, and examples. Hopefully they have all been convincing to you. (DW: If not, my e-mail address is somewhere on this webpage.)

As you practice writing your own proofs, take for an audience someone else in your position: a new student of discrete mathematics. Imagine the reader knows slightly less than you do. A good proof gently guides the reader along, making them feel smart when they anticipate a move.

It is also this book’s position that talking about proofs is nearly useless. They must be read and practiced. With that in mind, let’s get to writing some proofs about integers.

We will take for granted that sums, differences, products, and powers of integers are still integers. (Depending on how the set of integers is defined, these qualities may be “baked in” to the definition.) Every other fact will either be stated as a definition or a theorem, and proven in the latter case.

Definition 4.1.1. An integer is even if there exists an integer such that .

Definition 4.1.2. An integer is odd if there exists an integer such that .

For examples, , and .

Theorem 4.1.3. If an integer is odd, so is .

This statement may be obvious to you. If so, great; but we are trying to learn about the writing, so it is best to keep the mathematics simple. (It will only get a little more challenging by the end of the chapter.) To prove this statement, we must start with an arbitrary odd integer and prove that its square is odd. The integer in question must be arbitrary: if we prove the statement for 3, then we must justify 5, 7, 9, and so on.

Proof. Let be an odd integer.

The first step in the proof is to assume any of the theorem’s hypotheses. The theorem says that if we have an odd integer, its square we will be odd; so, we are entitled to assume an odd integer. However, there is nothing more we may assume. Fortunately, we know what it means for an integer to be odd.

That means there exists an integer such that .

A great second step is to “unpack” any definitions involved in the assumptions made. We assumed was odd. Well, what does that mean? However, now we are “out of road.” Let’s turn our attention to the object we mean to say something about; .

Therefore, which is an odd number by definition.

Remember: sums, powers and products of integers are integers – this is the only thing we take for granted, other than, y’know, arithmetic – and so is an integer, call it . Then is odd. The final step – obviously rendering the result in the explicit form of an odd number – is a good one to take because we assume our reader to be as new at this as we are. They likely recognize is odd, but we take the additional step to ensure maximum clarity.

Thus, if is an odd integer so is its square.

Definition 4.1.4. Let be a nonzero integer and let be an integer. We say divides or that is divisible by if there exists an integer such that . We write when this is the case.

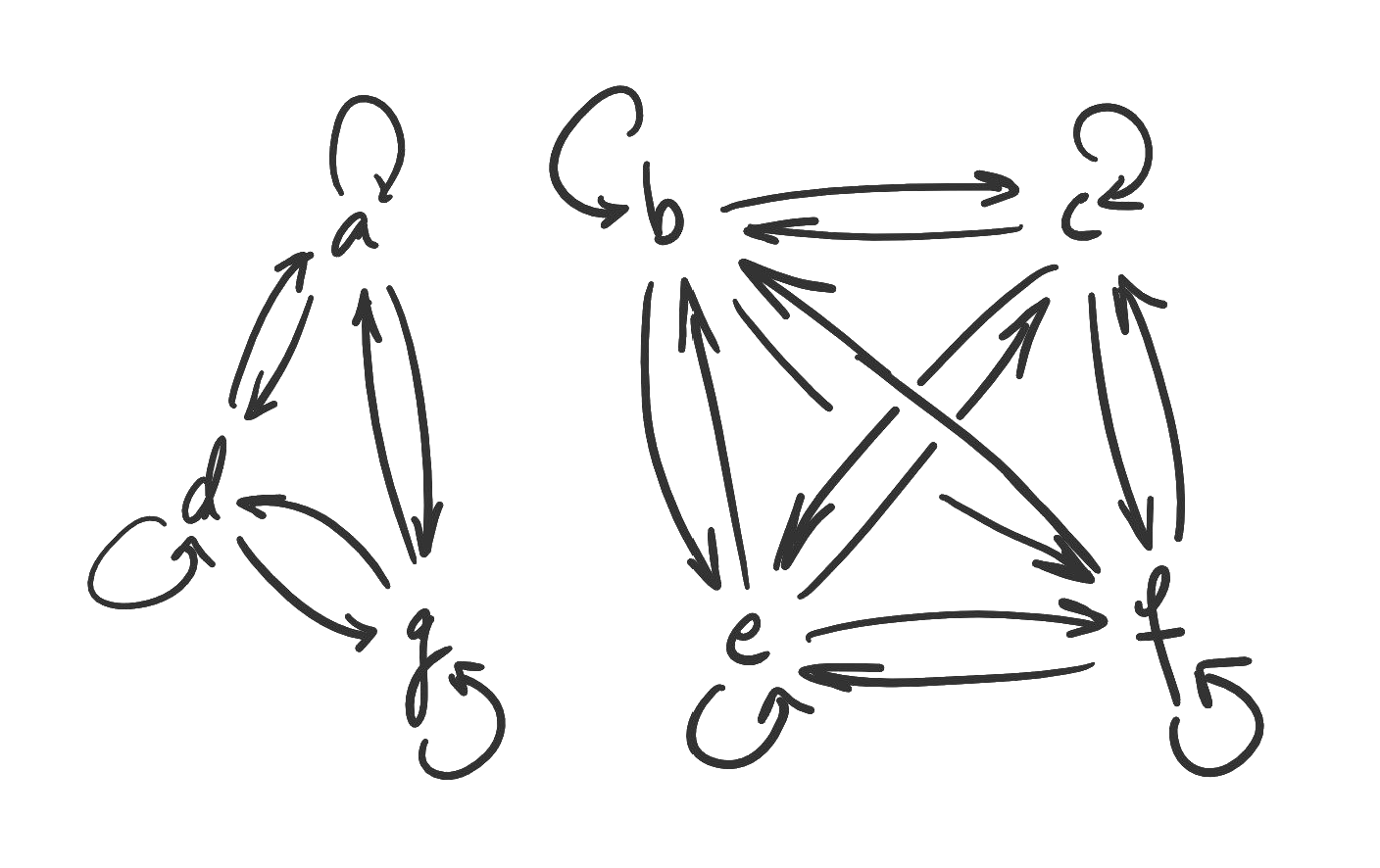

Theorem 4.1.5. Divisibility has the following three properties:

- For all integers , . (reflexiveness)

- For all positive integers and , if and then . (antisymmetry)

- For all integers , , and , if and then . (transitivity)

(These three properties together define something called a partial order, which is studied in the last chapter. Foreshadowing!)

You will prove two of these properties for yourself in the exercises. Here is a demonstration of the proof of the second property.

Proof. Suppose that and are positive integers where and .

As before, we are assuming only what is assumed in the statement of the theorem: that and are positive divide one another. By the way: why did we have to say they are positive?

That means that there exist integers and such that and .

The second step is usually to apply relevant definitions.

Combining these two statements, we have . Since is not zero, we see . Because and must be integers by definition, the only way that is for .

If we didn’t have the restriction that and were integers, then . However, this is only possible for integers when both numbers are one.

Therefore, if and are positive integers that divide one another, then .

In the next section we will explore indirect proofs and, from there, bidirectional proofs. You are highly recommended to try some exercises before moving on.

4.2 Indirect and bidirectional proofs

Consider the following theorem.

Theorem 4.2.1 If is an integer such that is odd, then is odd.

This theorem may seem the same as Theorem 4.1.3, but note carefully that it is not: it is the converse to the previous theorem’s .

Let’s try to imagine a direct proof of this theorem. Since is odd, there exists an integer such that . And then, seeking to prove a fact about , we take the positive square root of each side: . And…ew. Have we even proven anything about square roots? (We haven’t, and we won’t.)

We shall, instead, employ some sleight of hand. Recall from Chapter 2 that a conditional statement is equivalent to its contrapositive .

Proof (of Theorem 4.2.1). Suppose that is even. Then there exists an integer such that . In that case, which is even. Therefore for to be odd, must also be odd.

Another equivalence from Chapter 2 was that and combine to create . That is, we can prove an “if and only if” statement by proving both the “forward” and “backward” directions (which is which is a matter of perception). Therefore, in this chapter we have proven the following statement:

Theorem 4.2.2 An integer is odd if and only if is.

Remember that an “if and only if” statement is true if the component statements are both true or both false. So, this theorem also tells us that an integer is even if and only if its square is.

Theorem 4.2.3 Let be an integer. The integer is odd if and only if is even.

Proof. Let be an integer. First, suppose is odd. That means that there exists an integer where . So, which may be written as which is even. Therefore if is odd then is even.

Conversely, suppose is even. That means that for some integer . In that case, which is which is odd. Therefore for to be even, must be odd.

So, is odd if and only if is even.

4.3 Counterexamples

When proving a universal statement on a domain, it never suffices to just do examples (unless you do every example, which is usually not feasible or possible). However, remember from Chapter 3 that the negation of a universal statement is an existential one. You can prove that "every is a " is false by finding just one that isn’t a .

Examples. The statement “all prime numbers are odd” is false because is prime and even.

The statement “all odd numbers are prime” is false because is odd and not prime.

Takeaways

- A proof is a social action between speaker and listener. Keep your audience in mind as you write - try to make them feel smart! If you are taking a class, your proofs should be written for your classmates.

- If you are trying to prove that a statement is true for every object of a particular type, it is never appropriate to just do a couple of examples.

- In a direct proof of , you should start by assuming any hypotheses and unpacking the relevant definitions before arriving at the conclusion via valid reasoning.

- Sometimes it is hard to prove directly, in which case maybe you should try proving the contrapositive .

- You can prove by proving and .

- You have to practice proof writing a lot. (DW: I wasn’t a good proof writer until the end of graduate school, and one may argue I am still not a good proof writer.)

5. Recursion and integer representation

Videos:

One of the most important ideas in discrete mathematics is that of recursion, which we will explore in one way or another for many of the remaining chapters in the course. In this chapter, we will explore it through the idea of integer representation; representing integers in bases other than the common base 10. Bases you are likely to encounter in computer science contexts are base 2 (binary), base 8 (octal), and base 16 (hexadecimal). We will learn how to represent an integer in binary using a recursive algorithm, and then we will cheat our way into octal and hexadecimal.

5.1 Recursion

Recursion is when an algorithm, object, or calculation is constructed recursively.

Just kidding. Reread this joke after the chapter and it’ll make more sense.

Recursion means that an algorithm, object, or calculation is written, defined, or performed by making reference to itself. It is something more easily understood by example, and there will be plenty as we proceed. For now, consider the following example.

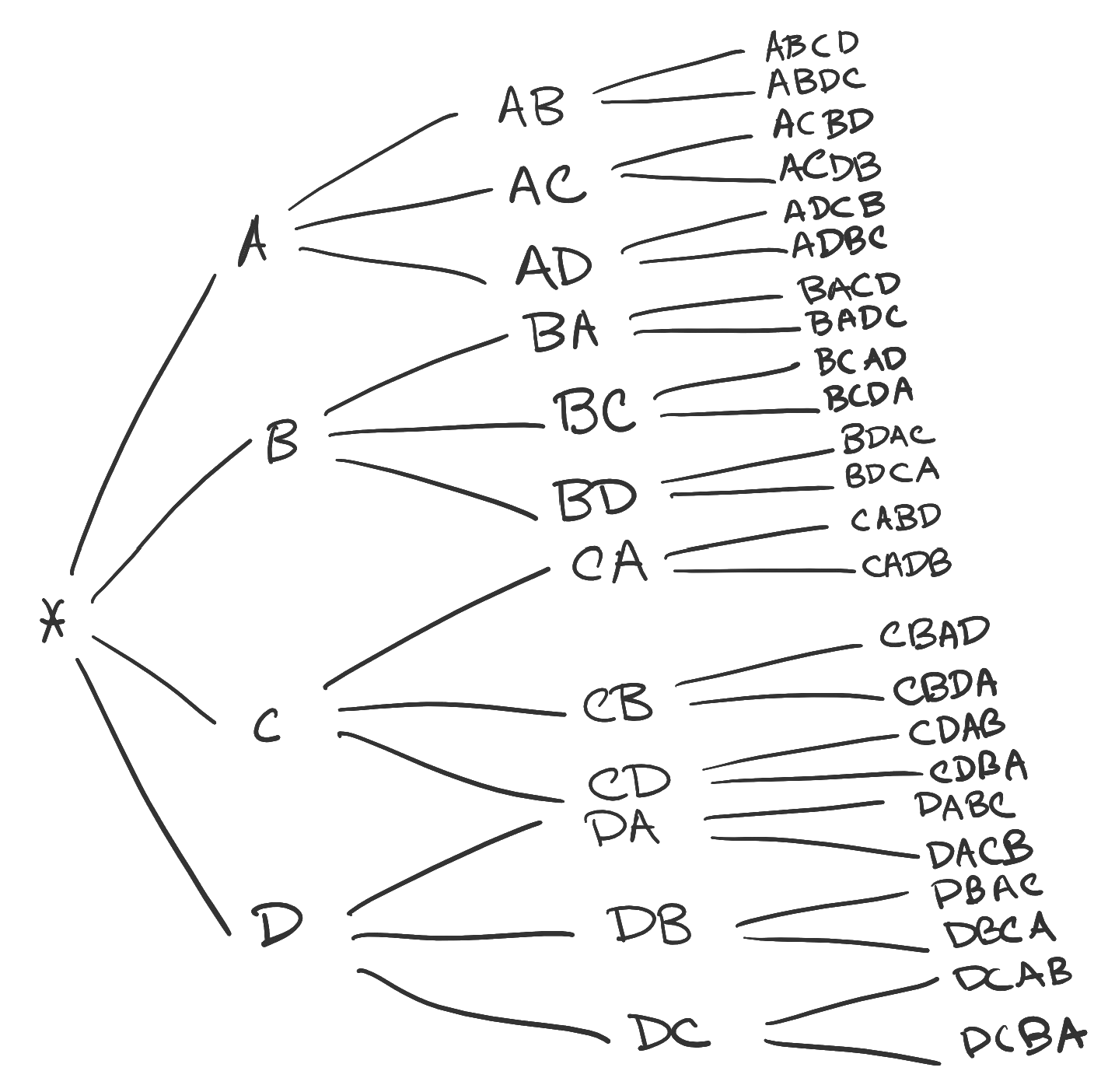

Definition 5.1.1 Let be a positive integer. Then (" factorial") is the product of all positive integers at most : Furthermore, we define .

Anyone questioning the usefulness of will have their questions answered in excruciating detail over the course of Chapters 9-13. If the reader has had calculus, then they may recognize as the denominator of the terms in the Taylor expansion of the function ; it is good for “absorbing” constants created by taking derivatives. However, we are not interested in any of its uses right now.

Example. Here are the values of a few factorials: , , , , , and .

You may have already noticed the recursion at work. Take for instance . We see the value of lurking here: .

In fact, for all positive , we can make the alternative (usually more useful) definition . This is the recursive defintion of the factorial, because we are defining the factorial in terms of the factorial.

Why is this okay? Well, we’re really collapsing a lot of work into two lines: it may make more sense to break it down one step at a time. First, define . This definition will make sense in a later chapter, but remember that mathematical definitions don’t necessarily need to “make sense.”

Next, define . This definition is valid, because the number , the operation , and the number have all been defined. And when we go to define , we have already defined all of these objects as well. And so on. Therefore, the following definition is a valid one.

Definition 5.1.2 Let be a natural number. Then is equal to 1 when , and otherwise.

5.2 Binary numbers

Now that we know how recursion works (or at least have more of an inkling than we did upon starting the chapter), we will see another example of it in the calculation of the binary representation of a number.

When we speak of the number one thousand five hundred eight or , we are implicitly talking about the composition of the number in terms of powers of 10: remembering that for all nonzero .

African and Asian mathematicians were writing in base-10, or decimal notation, as early as 3,000 BCE. Presumably the prevalence of decimal notation throughout human history is due to the fact that most of us have ten fingers and ten toes, making the base convenient. However, computers only have two fingers and two toes.

That last line is a joke, but here’s the idea: Computers read information by way of electrical signals, which are either on or off. So the simplest way for a computer to process information is in terms of two states. Here is a higher-level example: suppose you are writing some code. Is it easier to have a single if-then statement (two possible states), or nine (ten possible states)? This is why base-2, or binary numbers, are so prevalent in computer science.

The first step to becoming adept with binary numbers is to know many powers of . Fortunately, powers of are easy to calculate: simply multiply the last value by to get the next one. (This is a recursive definition for !)

Algorithm 5.2.1 Let be a natural number. To write the -digit binary expansion of :

- If , its binary expansion is .

- If , its binary expansion is .

- If , find the highest number such that . This is the number of binary digits of . Then, the binary expansion of is . The remaining digits , , , , are the digits of binary expansion of , or if the binary expansion of does not contain that digit.

This is perhaps a little intimidating at first glance, so let’s press on with an example.

Example. Let’s calculate the binary expansion of . Our target is neither nor , so we must find the highest power of that is no more than . This number is . Therefore, our binary number will have eleven digits, and right now it looks like

To find the values of the remaining digits , , etc., let’s find the binary expansion of the remainder .

Here’s the recursion, by the way: to find the binary expansion of , we must find the binary expansion of a smaller number.

We repeat the process. The highest power of that divides is . So, the binary expansion of is the nine-digit number Be aware that it is okay to reuse the names for the unknown digits, because the algorithm says they are the same for both numbers.

Next, we find the second remainder The highest power of 2 dividing 228 is Continue the example and see for yourself that the binary expansion of is . (Your remainders after will be , , , and .)

Now, the binary expansion of is and the binary expansion of is

The algorithm tells us what to do with our missing : since it does not appear in the expansions of any of our remainders, it is 0. Therefore,

This examples was made much more tedious than it needed to be so that the recursive steps were laid bare. In practice, you will simply continue subtracting off powers of until none of the target number remains, writing a if you subtracted a particular power of and a if you didn’t. Since we had to subtract , , , , , and , we write a for the eleventh, ninth, eighth, seventh, sixth, and third digits, and a everywhere else.

You have no doubt noticed that our binary numbers are all enclosed in the notation . This is to signify that the , for example, is referring to the number five and not the number one hundred one.

5.3 Octal and hexadecimal numbers

The word octal refers to the base-8 system and hexadecimal refers to the base-16 system. These two bases are useful abbreviations of binary numbers: octal numbers are used to modify permissions on Unix systems, and hexadecimal numbers are used to encode colors in graphic design.

We say “abbreviation” because of the following algorithm.

Algorithm 5.3.1 Suppose is an integer with a base representation and we desire to know its base representation, for some integer . Then we may group the digits of in base into groups of and rewrite each group as a digit in base . The resulting string is the binary expansion of in base .

The idea is that it is easy to go from some base to a base that is a power of the original base. Consider the following example.

Example. Write the number in octal and in hexadecimal.

The starting point will be to write in binary. Since its binary expansion is .

To write in octal, we will regroup the binary digits of by threes because . However, there are eight digits. No worries; we will simply add a meaningless as the leftmost digit of the binary expansion. Those groups are: , , and from left to right. In order, those numbers are , , and ; so, is .

Observe that in a base number system, the digits are . Therefore the highest digit we will see in octal is .

However – what about hexadecimal? Its set of digits is larger than our usual base-10 set. It is standard usage to use through to represent through .

| Decimal digit | ||||||

|---|---|---|---|---|---|---|

| Hexadecimal digit |

Since , to write in hexadecimal we will group the digits in its binary expansion by fours this time. Those groups are and , which represent fourteen and twelve respectively. So, .

To summarize our example, here are four different representations of the number .

| System | Decimal | Binary | Octal | Hexadecimal |

|---|---|---|---|---|

Takeaways

- Recursion is when something is defined or implemented in terms of its own definition or implementation. Recursion is incredibly useful both mathematically and programmatically.

- Given a positive integer , its factorial – the product of all positive integers at most – may be recursively expressed as . Meanwhile, .

- Binary numbers are convenient in programming. We may write a binary number by recursively writing a smaller number in binary first.

- We may express a binary number in octal or hexadecimal by grouping its digits by threes or fours.

6. Sequences and sums

Videos:

- Introduction to sequences

- Recurrence relations

- Directly calculating sums

- Indirectly calculating sums

6.1 Sequences

Remember that a set has no order. For example, and are the same set. Often, we care about the order objects are in. Enter the sequence.

Definition 6.1.1 Let and be some set. A sequence is a function . We write instead of . Here, is the index and is the term. We denote the entire sequence as (when the start and endpoints are stated elsewhere or are unimportant), (for an infinite sequence starting with ), or (for a finite sequence starting at and ending at ). A finite sequence is called a word from the alphabet .

Once again we have chosen a more technical, functional definition where we instead could have said “a sequence is a totally ordered list of objects.” It is worth considering the way in which a function can “port structure” from one set to another. If we wanted a totally ordered set it is hard to imagine a better candidate than . The sequence transfers the order structure from into whatever set we like.

Example. Consider the sequence Observing that each term is the square of its index, this sequence may be represented in closed form by writing .

Not every sequence may be so easily identified in closed form.

Example. Consider the sequence You may recognize this sequence as the famous Fibonacci sequence. There is a closed-form expression for , but you are unlikely to see it by visual inspection. However, notice that each term in the sequence is equal to the sum of the preceding two terms: for instance, .

Therefore instead we can represent the Fibonacci sequence recursively with a recurrence relation:

Once a recurrence relation is established, the terms of a sequence may be calculated iteratively or recursively. Each method has its strengths and weaknesses depending on the context; typically, recursive calculations are more difficult but require storing less information.

Example. Consider the sequence where

To calculate iteratively, we simply calculate each term of until we arrive at . So: , and . Then and Thus, .

In the recursive calculation, we will begin with and repeatedly apply the recurrence relation until we have only known values of the sequence: so, and . First, However, we “don’t know” what is, so we repeat the recursion: Now we substitute in the given values: It is important that we arrive at the same value both ways!

6.2 Sums

Given a sequence, a natural course of action is to add its terms together. To avoid writing a bunch of plus signs, we write for the sum of the members of , starting with the -th member and ending with the -th member.

Example. Consider the sum Notice that is being used for the index rather than . That’s okay! The sum’s value is

We can have sums of sums, and sums of sums of sums, etc. Double sums are common in computer science when evaluating the runtimes of doubly-nested for loops.

Example. Consider the sum This sum is rectangular in the sense that the bounds of one sum are not determined by the other, and so we could do this sum in either order: or Either way, the sum’s value is .

For another example, take Because the bounds of the -sum are dependent on the value of , we should iterate over first:

Each of these sums must be done separately. The first sum, of just one term, is equal to . The second sum is , the third is , and the fourth is ; so altogether the sum is .

We will end on two theorems that are important for manipulating sums, the second of which will be very useful later.

Theorem 6.2.1 [Linear property of summation] Let and be sequences and be a constant value. Then, and

Proof. The associative property of addition – – and the distributive property – – guarantee this theorem.

Example. Suppose we know that Then the value of the sum is Notice that both sums must start and end on the same index.

Theorem 6.2.2 [Recursive property of summation] Let be a sequence. Then

Proof. By definition, By the associative property of addition we may rewrite this as either or proving the theorem.

Example. Suppose it is known that and that Then,

Takeaways

- A sequence is a (possibly infinite) ordered collection of objects.

- Terms of a sequences may be defined in closed form, via a formula, or recursively based on prior terms of the sequence.

- Sums may be calculated directly or indirectly, using linearity or recursiveness.

7. Asymptotics

Videos:

Consider the following example of two programs, and , that process some data. The following table provides the number of computations taken by each program to process data points.

| Program | Program | |

|---|---|---|

| 1 | 1 | 58 |

| 10 | 100 | 508 |

| 20 | 400 | 1008 |

| 50 | 2500 | 2508 |

| 60 | 3600 | 3008 |

| 100 | 10,000 | 5008 |

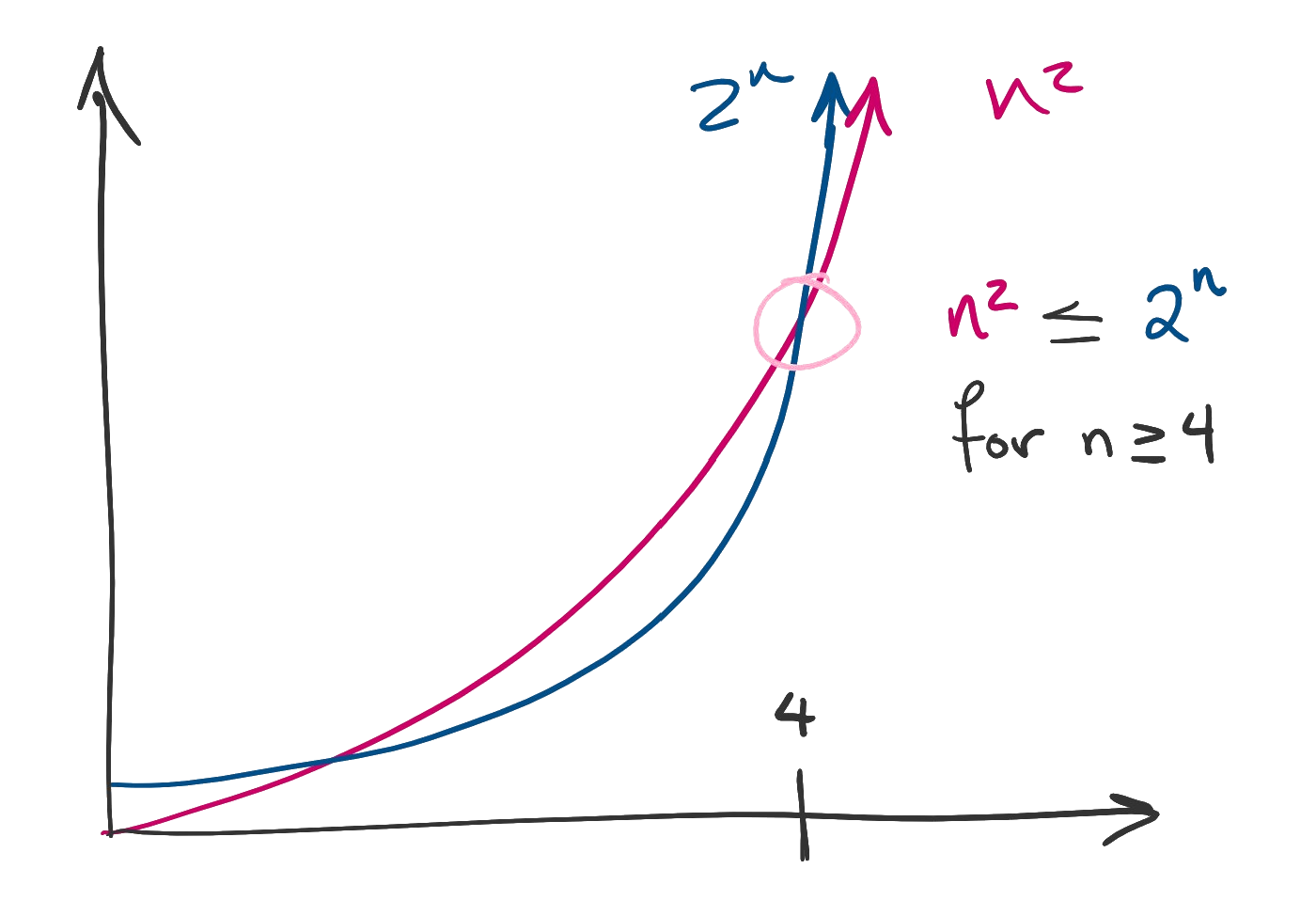

At first, Program looks much better than Program . However, as increases, it becomes clear that is worse at handling large quantities of data than . If and represent sequences of the numbers of steps taken by each program, then and . The way we quantify the relationship between the two sequences is by saying is “big-O” of .

7.1 Growth bounds on sequences

Definition 7.1.1 Let and be terms of sequences. We say is big-O of if there exist a real number and an integer such that for all , We write , even if the equals sign is technically incorrect.

This is another technical definition that can be hard to parse, but the idea is that eventually (after the -th term of the sequence) does not grow faster than . In this way, serves as an upper bound on the growth of .

As we will see again in Chapter 18, there may be many upper bounds. For example in a classroom of twenty-nine students I may say “Here are fewer than thirty students” or “Here are fewer than a million students.” The first statement is obviously more informative. So often you will be asked to find the least upper bound on the growth of ; this is usually a that is the simplest and is itself big-O of all the other options.

Theorem 7.1.2 Any non-negative sequence is big-O of itself.

Proof. Let be a non-negative sequence; that is, for all . Then the statement is satisfied by equality since . If we put , then this statement implies that .

We can form a basic hierarchy of sequences and their big-O relationships. In the below table, a sequence appearing to the left of a sequence means that .

| Slowest | Fastest | ||||||

|---|---|---|---|---|---|---|---|

At the left-most end of the chart, the slowest-growing sequence is . It is slowest-growing because it does not grow at all! In fact, any constant sequence is big-O of any other sequence.

Next is the sequence . In this book, refers to the base-2 logarithm that you might have elsewhere seen written as . Here are a couple of ways to conceptualize this sequence and its relevance to computer science.

- is big-O of the number of digits in the binary expansion of .

- is roughly the number of times a finite sequence of length can be cut in half.

The rest of the functions in the list should be familiar. Notice that all polynomial functions of higher degrees appear between and , and higher-order exponential functions like occur between and .

Figure. Two graphs are compared. Since after a point the blue graph is always above the red graph, the function in red is big-O of the function in blue.

Now we know where basic functions fit into the big-O hierarchy, but what about more complicated expressions like ? The following theorem will help.

Theorem 7.1.3 Let and be sequences.

- If is a nonzero constant, then .

- Suppose there exists a sequence such that and . Then, is big-O of as well.

- Suppose there exist sequences and such that and . Then, .

In plain English, this theorem says:

- Constant multiples do not affect growth rate.

- When adding two sequences, the larger absorbs the smaller. In other words, one must only look at the highest-order term of a sum.

- Growth rates are multiplicative; “big-O of the product is the product of the big-O’s,” though that language is very informal.

It is worthwhile to read the below proof of the theorem, but do not worry if you don’t understand it. Read it once, then skip it until after Chapter 8. You can work the problems at the end of the chapter even if you don’t totally understand the following proof.

Proof. To prove the addition rule (second bullet point), suppose and . That means there exist witnesses and where for all and for all . Then, for all . In other words, this inequality holds for all far enough along that both the big-O relationships hold. By definition, this means is big-O of .

To prove the multiplication rule (third bullet point), suppose there exist sequences and such that and . Therefore there exist witnesses and where for all and for all , so that for all ,

To prove the theorem about constant multiples (first bullet point), apply the multiplication rule where is the constant sequence . Observe that this sequence is big-O of the sequence by taking and . So, since and , as claimed.

Finally, we have enough tools to do some examples!

Examples. Consider the sequence whose terms are given by the formula . We would like to find the simplest function of lowest order such that .

Notice that is a sum of three terms: , which is big-O of , and which is big-O of . Only the highest-order of these matters; both the constant term and the linear term are big-O of , but not the other way around. Therefore .

What is the simplest and lowest-order function such that is big-O of ?

Theorem 7.1.3 says we only need the highest-order term of each factor, and then we must multiply those terms together. The highest-order term of the first factor is big-O of and the second factor is big-O of ; so, the whole sequence is big-O of .

7.2 Other growth relationships

We will briefly mention two other growth relationships here. Recall that saying is to say that is an upper bound on the growth rate of . Then saying (big-Omega) is to say that is a lower bound on the growth rate of , and $a_n = (big-Theta) is to say that and grow in the same way.

Definition 7.2.1 Let and be sequences. Then

- if , and

- if and .

Takeaways

- “Big-O” relationships are a way to quantify when one sequence is eventually bounded in its growth by another.

- There is a hierarchy of basic sequences that may be committed to memory.

- Beyond the basic sequences, three rules govern the relationships between more complicated functions: constant multiples do not matter; addition is absorptive; and multiplication is, well, multiplicative.

8. Proof by induction

Review.

- Basic algebra! This is probably the most algebraically taxing chapter in the book.

- Chapter 4: Proofs and the definition of divisibility.

- Chapter 5: Recursion.

- Chapter 6: Sequences and sums, particularly sigma notation and the recursive property of summation.

It is time to come full circle on our discussion of proofs and recursion to establish the recursive proof technique, proof by induction.

What are the two ingredients to knocking over a line of domino tiles? Each domino must be sufficiently close to the next, and you must tip over the first one.

Proof by induction proceeds in much the same way. We want to prove some statement holds for every natural number past a base value . We prove the following two claims:

- The base case: That is true. The base case is easy to prove - just plug in and make sure the statement is true!

- The inductive step: That the inductive hypothesis, , for some integer , implies .

Once both of these statements are proven we will have , , , and so on; we will know to be true for all .

8.1 Inductive proofs involving sums